MIT scientists have developed a novel approach to analyzing time series data sets using a new algorithm, termed state-space multitaper time-frequency analysis (SS-MT). SS-MT provides a framework for analyzing time series data in real time. It lets researchers work in a more informed way with large data sets that are nonstationary, i.e., whose characteristics evolve over time.

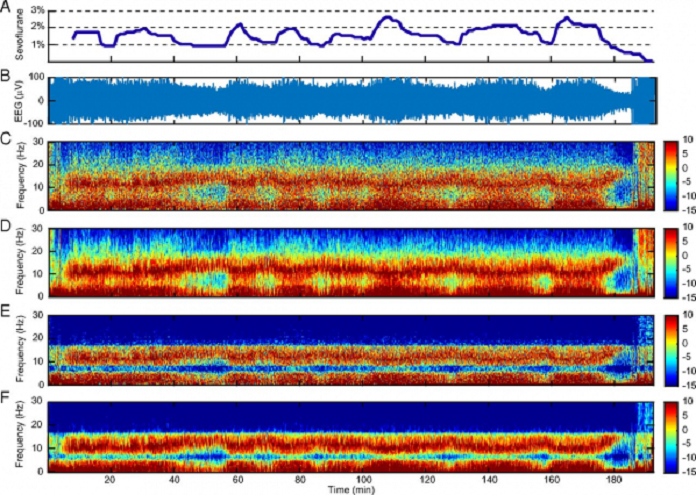

MIT researchers used their new analytical method to analyze raw brain activity data (B). The resulting spectrograms (E and F) show reduced noise and increased frequency resolution, or contrast, compared with standard spectral analysis methods (C and D). Image courtesy of Seong-Eun Kim et al.

Whether the task is tracking brain activity in the operating room, seismic vibrations during an earthquake, or biodiversity in a single ecosystem over a million years, measuring the frequency of an event over a period of time is a fundamental data analysis task. It yields critical insight in many scientific fields.

The new approach lets researchers measure how the properties of the data change over time. It also lets them make formal statistical comparisons between arbitrary segments of the data.

Emery Brown, the Edward Hood Taplin Professor of Medical Engineering and Computational Neuroscience, said, “The algorithm functions similarly to the way a GPS calculates your route when driving. If you stray away from your predicted route, the GPS triggers the recalculation to incorporate the new information.”

“This allows you to use what you have already computed to get a more accurate estimate of what you’re about to calculate in the next time period. Current approaches to analyses of long, nonstationary time series ignore what you have already calculated in the previous interval leading to an enormous information loss.” ……
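The GPS analogy describes a recursive estimate. Each new interval of data updates the previous result instead of being analyzed from scratch. The Python sketch below illustrates that idea. It is not the authors' implementation, and it assumes the following:

- The signal is split into non-overlapping intervals.
- Tapered Fourier coefficients are computed in each interval.
- Each coefficient follows a simple random-walk model.
- A Kalman filter, applied separately per taper and frequency, carries the previous estimate forward.
- Filtered powers are averaged across tapers.

The function names `ss_multitaper` and `sine_tapers` are hypothetical. So are the parameters `state_var` and `obs_var`, which are fixed values here rather than values estimated from data. The sketch also swaps in sine tapers for the Slepian (DPSS) tapers commonly used in multitaper analysis, which keeps it NumPy-only. The ordinary multitaper spectrogram is returned alongside for comparison.

```python
import numpy as np


def sine_tapers(n, k):
    """Orthonormal sine tapers, used here as a stand-in for Slepian (DPSS) tapers."""
    t = np.arange(1, n + 1)
    return np.array([np.sqrt(2.0 / (n + 1)) * np.sin(np.pi * j * t / (n + 1))
                     for j in range(1, k + 1)])


def ss_multitaper(x, win, n_tapers=3, state_var=1.0, obs_var=1.0):
    """Hypothetical sketch of a state-space multitaper spectrogram.

    x         : 1-D signal
    win       : samples per non-overlapping interval
    state_var : assumed variance of the random-walk step between intervals
    obs_var   : assumed variance of the observation noise in each interval
    Returns (ss, mt), each of shape (n_intervals, win // 2 + 1).
    """
    n_win = len(x) // win
    tapers = sine_tapers(win, n_tapers)            # (K, win)
    segs = x[: n_win * win].reshape(n_win, win)     # (T, win)
    # Tapered Fourier coefficients for every interval and taper: (T, K, F)
    coefs = np.fft.rfft(segs[:, None, :] * tapers[None, :, :], axis=-1)

    # Standard multitaper: each interval is estimated independently
    mt = np.mean(np.abs(coefs) ** 2, axis=1)

    # Kalman filter under the assumed model:
    #   z_t = z_{t-1} + w_t,  w_t ~ variance state_var
    #   y_t = z_t + v_t,      v_t ~ variance obs_var
    est = coefs[0].copy()                 # start from the first interval
    var = np.full(est.shape, obs_var)
    filtered = [est.copy()]
    for t in range(1, n_win):
        pred_var = var + state_var                 # predict: carry the previous estimate forward
        gain = pred_var / (pred_var + obs_var)     # weight given to the new interval
        est = est + gain * (coefs[t] - est)        # update with the new data
        var = (1.0 - gain) * pred_var
        filtered.append(est.copy())
    filtered = np.array(filtered)

    # Average filtered power across tapers
    ss = np.mean(np.abs(filtered) ** 2, axis=1)
    return ss, mt
```

Example usage: for a noisy 8-cycle sinusoid, `x = np.sin(2 * np.pi * 8 * n / 64) + noise`, call `ss, mt = ss_multitaper(x, win=64, state_var=0.1)`. This returns both spectrograms, so their noise floors can be compared directly. Making `state_var` smaller relative to `obs_var` smooths more and suppresses more noise, but the estimate then follows genuine changes in the signal more slowly.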

Full post: https://www.techexplorist.com/novel-algorithm-enables-statistical-analysis-time-series-data/

Abstract: http://www.pnas.org/content/early/2017/12/15/1702877115